Tech Against Terrorism presents here the latest edition of the Online Regulation Series. This compendium navigates the complex online regulatory landscape requiring tech platforms to prevent the dissemination of illegal and harmful content on their services.

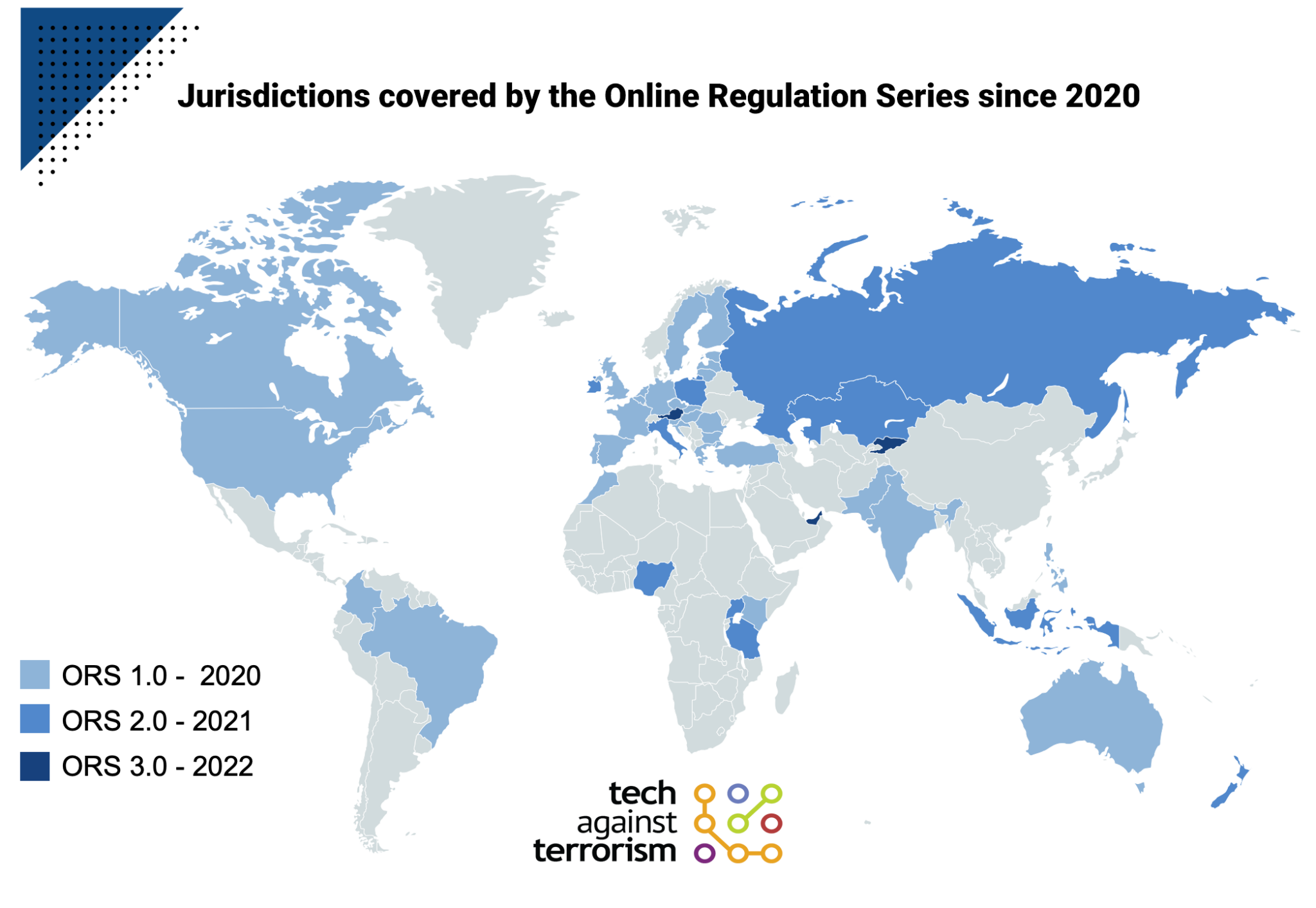

By monitoring over 100 pieces of legislation from 30 jurisdictions around the world, we have tracked the evolution of legislation impacting online content and how it affects service provider efforts to counter terrorism and violent extremism.

The Online Regulation Series 3.0 Handbook is not only a crucial resource for tech platforms but also invaluable for policymakers and counterterrorism experts attempting to make sense of the fast-changing legal landscape.

In compiling the Online Regulation Series 3.0, we have sought to answer the following questions:

- How is the global regulatory landscape evolving?

- What are the implications for platforms’ online counterterrorism efforts?

- How can we inform efficient and rights-safeguarding legal responses to terrorist use of the internet?

In the 2023 edition of the Online Regulation Series, we raise concerns about the latest trends in online regulations and outline key recommendations for the policymakers who design the rules.

Recommendations include:

- Reconsider strict removal deadlines: account for the size and capacity of tech platforms to take down identified terrorist or violent extremist content, and avoid unnecessary and overzealous content removal.

- Improve definitional clarity of terrorist and illegal content: correctly identifying terrorist and violent extremist content represents a significant challenge for tech companies. Policymakers should provide clear frameworks and guidance for tech companies to identify terrorist content correctly and swiftly on their services.

- Require transparency reporting proportionate to tech companies’ size, context and capabilities: transparency reporting is an excellent tool to hold tech platforms to account for their efforts in preventing the terrorist exploitation of their services. However, such an obligation must be tailored to the tech platform’s size and capacity, and to the market in which it operates.

- Empower platforms to act on their own guidelines: tech platforms uphold their community guidelines as a crucial contract of trust with their users. Regulations should empower companies to act on these guidelines, which are an important means by which platforms can adapt their moderation practices to keep pace with the evolving online threat.

- Engage in public consultations: any proposed legislation and regulation for online activities should be accompanied by public consultations, allowing for greater transparency in the formulation of policy.

Jurisdictions under consideration include Australia, Austria, the European Union, India, Kyrgyzstan, New Zealand, Singapore, the United Arab Emirates, the United Kingdom and the United States.

Background

In 2017, Germany ushered in a new era in online regulation when it passed the Network Enforcement Act (NetzDG) and became one of the first countries to require platforms to prevent the spread of illegal and harmful content online, introducing a 1-hour removal deadline for terrorist content.

Since then, a raft of similar legislation and regulations has been enacted around the world. Beginning in 2021, the Online Regulation Series has attempted to make sense of the emerging and often fragmented regulatory landscape, assessing whether each regulation reviewed meets its stated aim of countering illegal online content and terrorist use of the internet, as well as any risks it presents to human rights and tech sector diversity. We also commend legislation passed with regard for the rule of law and due process, and which provides the necessary human rights safeguards.

Policymakers’ increased interest in regulating online content has led to a complex and multifaceted landscape of laws requiring platforms to prevent the dissemination of illegal and harmful content on their services. Germany’s 2017 Network Enforcement Act marked a turning point in online regulation and was followed by a global wave of regulatory discussions around content governance and the removal of illegal or harmful content.
