Tech Against Terrorism

Transparency reporting for smaller platforms

Transparency reporting is an important way for the tech sector to increase awareness of its internal content moderation decision-making processes. Reporting can also increase transparency around information and takedown requests made of tech platforms by external entities, such as law enforcement agencies or governments. With regard to terrorism and online terrorist content, transparency reporting helps increase public understanding of the extent of the threat and ensures accountability for counterterrorism efforts carried out by both the tech sector and governments.

Currently, around 70 companies publish transparency reports, with four reporting specifically on terrorist content. Civil society groups and academics have long noted the importance of transparency reporting around content moderation practices in general and terrorist content specifically. Although no government publishes transparency reports on its own referrals and removal requests, governments have recently started to push for increased tech sector transparency. Several legislative proposals (for example the proposed EU regulation on online terrorist content and the UK Online Harms White Paper) and newly implemented laws, such as Germany’s NetzDG, mandate transparency reporting.

Challenges for smaller tech companies

Many of the smaller platforms that are exploited by terrorist groups struggle to publish transparency reports due to lack of capacity: without automated data capturing processes in place, compiling and publishing a transparency report can be time- and labour-intensive. In our analysis of IS use of smaller platforms, we identified more than 330 platforms being used. The majority of these are smaller or micro-platforms managed by one person only. Since this is where a large part of the threat lies, it is important that we encourage these companies to be more transparent whilst recognising that transparency needs to be both practical for smaller companies and meaningful for observers. Placing initial demands that are too high might discourage companies from reporting or, in the case of legal obligations, leave smaller companies unable to compete with larger counterparts.

In addition, the lack of consensus on the definition of online terrorist content leads to varying reporting methods across platforms and a lack of clarity on what content is being sanctioned as part of efforts to tackle terrorist use of the internet. Companies also have distinct content policies based on their intended audience, meaning that they will inevitably – particularly in the absence of international consensus around this terminology – have different definitions of terrorist content. This is complicated by the fact that different platforms are built on different technologies. Therefore, a one-size-fits-all approach – which we fear may be the result of legally mandated transparency reporting – is illogical. Further, transparency reporting should not be used as an indirect way to impose definitions on companies in the absence of government-led efforts to improve international consensus based on the rule of law.

Our solution: supporting smaller tech companies in building sustainable processes

Before smaller platforms consider transparency reporting they first need to have the resources and tools to develop their own content moderation policies and enforcement mechanisms.

At Tech Against Terrorism, we have worked with the tech sector since 2017, supporting companies at every step of implementing mechanisms to counter terrorist use of their platforms: from drafting robust Terms of Service and/or content standards, to practical content moderation, to compiling transparency reports.

As part of our Mentorship programme for smaller companies – carried out on behalf of the Global Internet Forum to Counter Terrorism (GIFCT) membership – we work with companies to help them meet the GIFCT membership criteria. This programme includes supporting smaller companies in producing their first-ever transparency report or improving upon existing reporting. In the past year, we have provided specialised mentorship to 12 smaller companies.

Further, transparency reporting is a core part of our workshop syllabus, and over 120 platforms have participated in our transparency report training. All of this work is carried out in accordance with international human rights principles and the Tech Against Terrorism Pledge, which is based on international norms and laws.

Going forward

In 2020, we pledge to do even more. On 8 April, we will host an e-learning webinar together with the GIFCT aimed at offering practical advice to tech companies around transparency reporting. We will also improve our practical support mechanisms for companies around transparency reporting to facilitate data collection, management, analysis, and report production. To inform this process, we will launch a consultation process for smaller tech platforms to help guide our development over the next month.

Recommendations

To facilitate content moderation and transparency reporting for smaller companies, we recommend using the UN Security Council Consolidated List as a baseline for groups that should be considered terrorist. Although this list is not perfect (it contains no far-right terrorist groups, for example), it does create a common baseline that is based on international consensus. To facilitate consensus around terrorist content, logos of groups designated in the UNSC list should be tied to the sanctions scheme, meaning that content carrying designated group logos would be considered terrorist content. Such a measure would make it easier for smaller companies to adopt automated solutions for detecting terrorist content.

Recommendations for governments

- Increase transparency reporting on government practices, including internet referral unit requests, to counter terrorist use of the internet

- Facilitate support to the wider tech sector, in particular smaller tech companies, for generating transparency reporting to allow for increased insights into PTUI

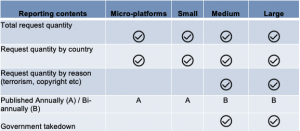

- Balance expectations about tech company transparency reporting, supporting meaningful transparency whilst accounting for pragmatic considerations, ideally segmenting expectations according to company size and capacity

- Encourage coordinated efforts around smaller company transparency and facilitate automated reporting via the Terrorist Content Analytics Platform (TCAP)

Recommendations for tech companies

- Larger tech companies, including the GIFCT, should – to the extent it is legally possible – strive to provide clarity on key metrics. These include providing reasons behind government takedown requests, data on government removal requests based on company Terms of Service (ToS), more detailed information on Terms of Service and/or Community Guidelines enforcement, and metrics on user appeals for redress

- Smaller tech companies should strive to produce transparency reports in line with their practical capacity, for example based on the draft guidelines shared below, and in collaboration with Tech Against Terrorism

- All tech companies should consider (in accordance with their practical capacity) accompanying their Terms of Service with relevant definitions, law enforcement guidelines, as well as details of their content moderation policies and decision-making processes. Tech Against Terrorism can support companies in this process
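For a platform with limited capacity, the metrics named in the recommendations above (government requests and their basis, Terms of Service enforcement, and user appeals) could in principle be tallied from a simple log of moderation decisions. The following is only a minimal sketch under stated assumptions: the record fields (`source`, `basis`, `action`, `appealed`, `reinstated`), the `summarise` function, and the sample entries are all hypothetical illustrations, not part of any Tech Against Terrorism guideline or the TCAP.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationRecord:
    """One moderation decision. All field names are hypothetical."""
    source: str               # who flagged it: "user", "government", "automated"
    basis: str                # ground cited: "terms_of_service" or "legal_order"
    action: str               # outcome: "removed" or "no_action"
    appealed: bool = False    # the affected user appealed the decision
    reinstated: bool = False  # content was restored after the appeal


def summarise(records):
    """Aggregate moderation records into report-level counts."""
    records = list(records)
    government = [r for r in records if r.source == "government"]
    appeals = [r for r in records if r.appealed]
    removed = [r for r in records if r.action == "removed"]
    return {
        "total_decisions": len(records),
        "removals_by_source": dict(Counter(r.source for r in removed)),
        "government_requests": len(government),
        "government_requests_by_basis": dict(Counter(r.basis for r in government)),
        "appeals_received": len(appeals),
        "appeals_reinstated": sum(r.reinstated for r in appeals),
    }


# Made-up sample entries, purely for illustration.
sample_log = [
    ModerationRecord("user", "terms_of_service", "removed"),
    ModerationRecord("government", "terms_of_service", "removed",
                     appealed=True, reinstated=True),
    ModerationRecord("government", "legal_order", "no_action"),
    ModerationRecord("automated", "terms_of_service", "removed", appealed=True),
]

report = summarise(sample_log)
for key, value in report.items():
    print(f"{key}: {value}")
```

Recording each decision as it is made, rather than reconstructing counts at publication time, is one way to keep report compilation manageable for a one-person platform; which categories to record would depend on the platform's own content policy.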

If you are a tech company that wants to learn more about our work on transparency and access some of our resources, please get in touch at contact@techagainstterrorism.org