We interrupt this broadcast for a special announcement:

Tech Against Terrorism is currently expanding its expertise on the use of end-to-end encryption (E2EE), and we are trying to deepen our comprehension of how online users and the general public perceive encryption, and the wider “encryption debate”.

To inform our understanding of this complex discussion, we would be interested in hearing more from you and how you perceive encryption, in particular E2EE, as a user of online services. To this end, we kindly ask you to spare a few minutes of your time to respond to our short and anonymous survey.

Headline news

– This month, we successfully launched automated terrorist content alerts powered by the Terrorist Content Analytics Platform (TCAP). The TCAP sends email alerts to tech companies when it identifies terrorist content hosted or shared on their platforms. In the first stage, we have included over 60 small, medium and large tech platforms representing various online services, including social media, file-hosting and content streaming.

– We hosted our 2nd session of the TCAP office hours in early November. This is one of the steps we are taking to ensure that the platform is developed in a transparent manner.

– Earlier this month, we published an article on Vox-Pol about our Terrorist Content Analytics Platform (TCAP), detailing how it is being developed with a “transparency by design” approach. Our piece responded to an Electronic Frontier Foundation (EFF) article outlining concerns with automated moderation tools.

– In late November, our Director, Adam Hadley, took part in the third INTERPOL-UNICRI Global Meeting on Artificial Intelligence for Law Enforcement, presenting during a panel dedicated to “Tapping into AI to Fight Crime – Terrorist Use of the Internet and Social Media”.

– Throughout October and November we conducted the Online Regulation Series, focusing our knowledge-sharing efforts on the state of global online regulation. During the Online Regulation Series we published 17 country-specific blog posts as well as 3 blog posts on tech sector initiatives and insights from academia:

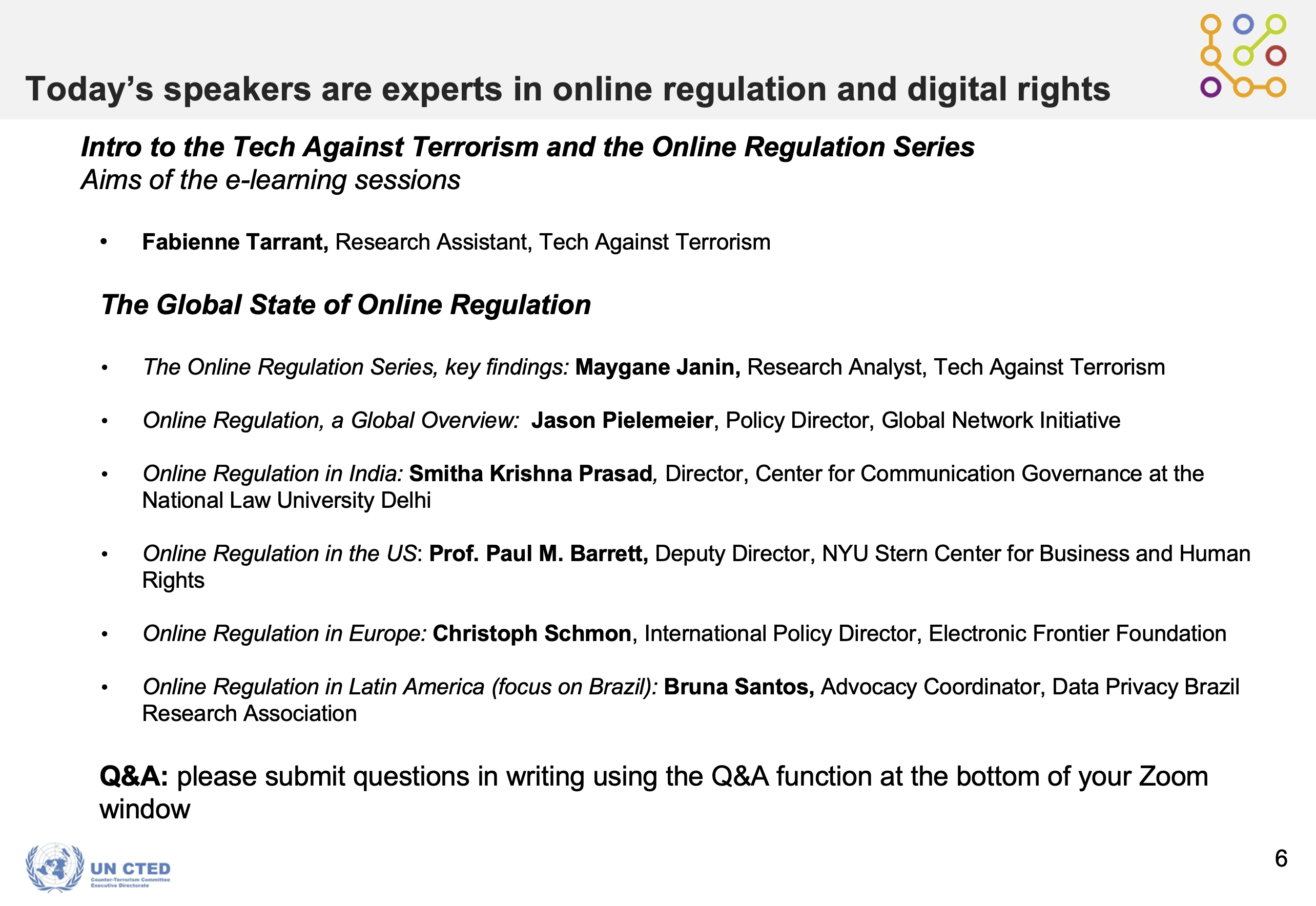

– We concluded the Online Regulation Series with a webinar on The State of Global Online Regulation, welcoming tech policy and digital rights experts to share insights on key regulations around the world that might shape the future of online regulation. If you would like to access a recording of this webinar, you can reach out to us at contact@techagainstterrorism.org

Media coverage

– Following a terrorist attack in Vienna on Monday, 2 November, our Director, Adam Hadley, discussed terrorist use of the internet, in particular of social media, and the related challenges of identifying terrorist content at scale with John Pienaar on Times Radio.

– Our Director, Adam Hadley, as founder of our parent organisation the Online Harms Foundation, was quoted in an article on a proposal made by the UK Labour Party to introduce fines for tech companies failing to act to “stamp out dangerous anti-vaccine content”.

Upcoming

– How can UN agencies support the counterterrorism efforts of smaller tech platforms whilst safeguarding human rights and freedom of expression? What are the existing avenues of cooperation between tech platforms and intergovernmental organisations? In our upcoming webinar, “Cooperation Between the UN and Smaller Tech Platforms in Countering Use of the Internet for Terrorist Purposes”, we aim to shed light on these issues. The webinar will be held on Wednesday, 9 December, 4pm GMT. You can register here.

Organised in partnership with UN CTED.

– When tackling terrorist use of the internet, is content removal really our only option? Our upcoming webinar - on Wednesday, 16 December, at 5pm GMT - will look at what alternative steps tech companies can take. We have an exciting panel of experts and practitioners lined up – don’t forget to register here.

Don't forget to follow us on Twitter to be the first to know when a webinar is announced.

Reader's Digest 4 December

Top Stories

– MEGA, the cloud storage and file hosting service, has published its 6th annual transparency report, covering October 2019 to September 2020. The report provides detailed information about MEGA’s content moderation practices, including the removal of “objectionable (illegal) content”. MEGA’s report is a commendable example of how a fully end-to-end encrypted service can cooperate with law enforcement and take action against illegal content.

You can read Tech Against Terrorism blog posts about transparency reporting for smaller tech platforms here.

– SIRIUS, Europol’s project on cross-border access to electronic evidence, has released its 2nd annual report: EU Digital Evidence Situation Report. The report highlights a 14.3% increase in government requests for user information from 2018 to 2019. It also shows that the transparency reports tech companies publish on the requests they receive from law enforcement and governments are a key source of information for assessing the volume of such requests.

– The Internet & Jurisdiction Policy Network has published its latest Outcome on User Notification in Online Content Moderation. The brief elaborates on the different stages of user notification and outlines different ways for it to be implemented “meaningfully”.

– The Facebook Oversight Board has announced that it has selected its first six cases. Three relate to violations of the hate speech policy, while the others concern violations of the dangerous individuals and organisations, incitement to hatred, and nudity and sexual activity policies. Since October, the Oversight Board has received more than 20,000 cases for review. The Board has also announced the appointment of 5 new trustees for the Oversight Board Trust.

– Germany has banned far-right violent extremist group the Wolf Brigade 44, stating that the group aims to establish a Nazi state. This announcement followed a police raid during which “a crossbow, machete, knives and Nazi symbols” were discovered.

Tech Policy

Social networks: is friction the future of moderation? (Réseaux sociaux : la friction est-elle l’avenir de la modération ?): Lucie Ronfaut reflects here on Twitter’s latest retweet update. Introduced a few weeks before the US election in order to prevent attempts to undermine it, the update prompts users to “Quote Tweet” instead of simply retweeting, thus introducing an extra step in the user experience. Based on this, Ronfaut elaborates on the idea of introducing friction, “small digital barriers”, in the user experience to push users to think before posting something online. In particular, she focuses on how these barriers can be used as an alternative content moderation strategy, rather than relying solely on content deletion. This “friction” strategy calls into question the role of the spread and virality of content, in particular how it can contribute to the diffusion of hateful content. Dominique Boullier, a professor at Sciences Po Paris and an expert in the sociological impact of social media, has argued that by limiting the number of a user’s daily posts, users would have to prioritise what they want to publish, which could lead to less hateful content. That way, freedom of diffusion would be impacted rather than freedom of expression, according to Boullier. However, Ronfaut concludes that such processes of friction are still rare, as they call into question the moderation model of social media and introduce users to a moderation process that was largely invisible to them until recently. (Ronfaut, Libération, 29.11.2020, article in French)

Islamist Terrorism

The Radicalization of Bangladeshi Cyberspace. Robert Muggah examines the expansion of terrorist online space in Bangladesh. Muggah argues that “online radicalization is widespread and increasingly normalized” in the country, as demonstrated by the Bangladeshi police stating that over 80% of people arrested for terrorism in recent years had been radicalised online. Whereas the country has been relatively free from terrorist violence in recent years, Muggah warns that a wave of extremism could be reignited in the country as misinformation spreads and online extremism grows. He stresses the importance of content that is available in local dialects and made to avoid detection by law enforcement and social media platforms. To demonstrate this, he takes the case study of al-Qaeda in the Indian Subcontinent (AQIS), which is quite active on social media via “skilfully produced videos and viral content.” Muggah also stresses that the Covid-19 pandemic has offered new opportunities for AQIS to exploit, as internet use exploded in Bangladesh and AQIS published content related to the pandemic. Muggah concludes with hopeful signs for efforts to counter the radicalisation of the online space in Bangladesh, as social media platforms are strengthening their monitoring capacity, and local authorities are working with the UN to tackle extremism online and offline. (Muggah, Foreign Policy, 27.11.2020)

Counterterrorism

Of Challengers and Socialisers: How User Types Moderate the Appeal of Extremist Gamified Applications: In this article, Linda Schlegel sheds light on the relation between gamification, “the use of game elements in non-game contexts”, and online radicalisation. In particular, she focuses on how different types of online users, based on a typology of video game players, can experience different online radicalisation processes and be targeted differently by recruiters using gamified propaganda. Schlegel distinguishes between competitive users on the one hand, and socially-driven users on the other. Competitive users, or challengers, are motivated by competition and comparing themselves to others, and can be motivated by the idea of “level[ing] up”. She assesses that they are more likely to engage “thoroughly with the propagandistic content and the group’s narrative”, without noticing it as they are “playing”. Challengers who are also “status seekers” are targeted by recruiters who offer them opportunities for higher status in the organisation. By contrast, socialisers are motivated by the “feeling of belonging to an online community”. Given that radicalisation is often a social endeavour, she argues that such users are more likely to engage with a group’s propaganda and radical ideas in an attempt to gain points by connecting with others. Schlegel concludes by calling for further research into the intricacies of gamification and radicalisation, to help understand the role of gamification in the radicalisation of extremist subcultures and how to develop effective counter-measures. (Schlegel, GNET, 02.12.2020)

Counter-Radicalization and Female Empowerment Nexus: Can Female Empowerment Reduce the Appeal of Radical Ideologies? In this article, Nima Khorrami investigates whether female empowerment could support effective counter-radicalisation strategies. She begins by analysing discourses on gender and the role of women amongst violent extremist groups. She argues that behind different public discourses, far-right and Islamist extremists share similar views on women and gender: namely, that women “are to be protected and care[ed] for” and that gender equality is “part of a globalist liberal agenda”. She goes on to analyse how feminist literature has shown that women experience socio-political and economic issues differently than men due to a number of factors, including societal norms and their roles in society. According to Khorrami, this observation could be extended to how women “experience and understand violence, terrorism and extremism in vastly dissimilar ways to their male counterparts”. Based on that, and supporting her argument with existing gender equality components in countering violent extremism at the UN level, she calls for a greater understanding of women’s involvement in extremism and terrorism, and for increased recruitment of women in security forces in order to strengthen counter-radicalisation. More importantly, she argues for greater female empowerment in order for women to be able to act on their awareness of radicalisation processes around them, given their “central roles in families and communities”. (Khorrami, European Eye on Radicalization, 30.11.2020)
